Lanxiang Hu

I'm a PhD candidate at UCSD, fortunate to be advised by Prof. Hao Zhang and Prof. Tajana Šimunić Rosing. My research interest is in building efficient and reliable AI models and systems at scale. Previously, I was a research intern at Snowflake AI Research, where I worked on agents and efficient inference. Before joining UCSD, I spent a wonderful time working as a visiting research intern with Prof. Song Han. I completed my undergraduate degree at UC Berkeley with majors in CS and Physics. Currently, my work lies in efficient AI and AI system evaluation. My prior work spans the following areas:

News

Selected Publications

* denotes equal contribution.

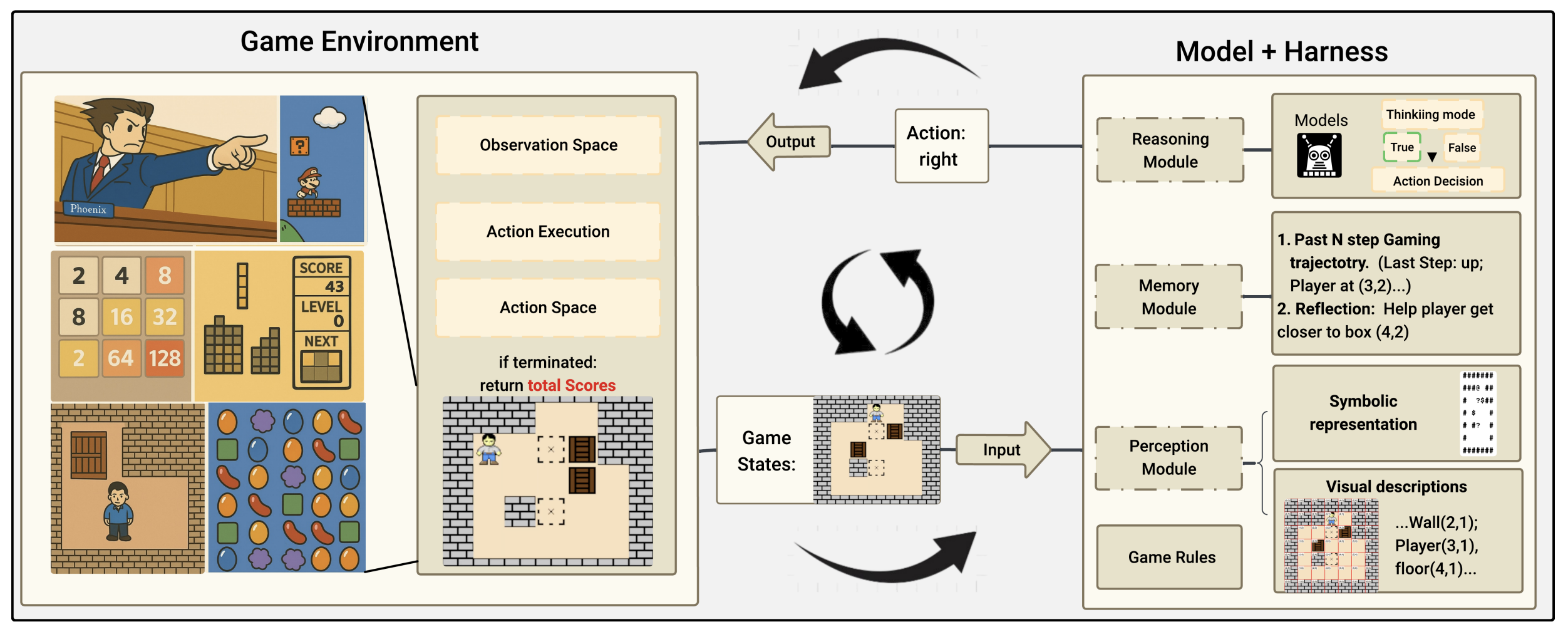

Lmgame-Bench: How Good are LLMs at Playing Games?
Lanxiang Hu*, Mingjia Huo*, Yuxuan Zhang†, Haoyang Yu†, Eric P. Xing, Ion Stoica, Tajana Rosing, Haojian Jin, Hao Zhang
ICLR, 2026
arxiv / code / website

We introduce Lmgame-Bench, which evaluates the latest large models with games and addresses evaluation challenges by providing scaffolds. We present a quantitative analysis of the relationship between model gaming performance and results on existing benchmarks.

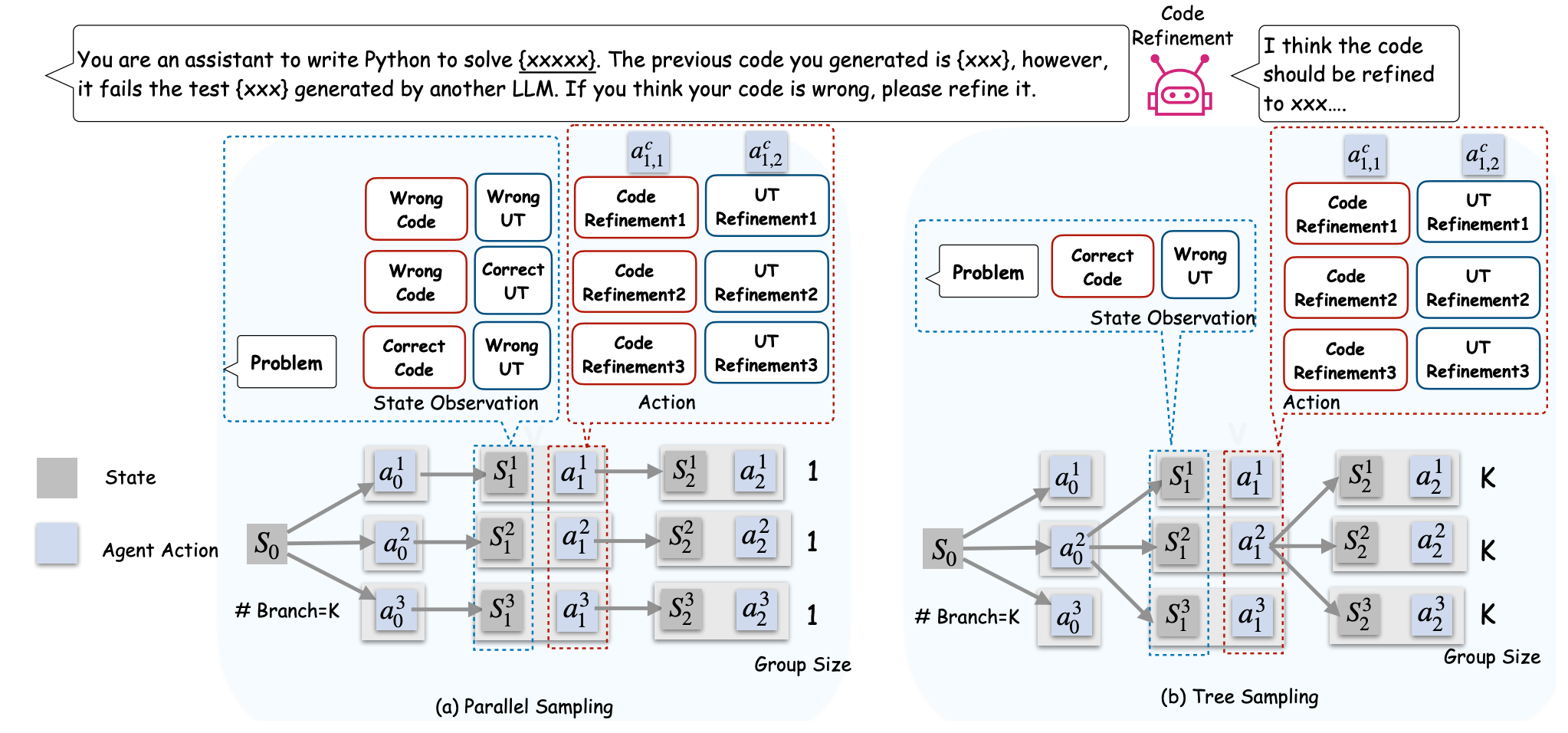

Stronger-MAS: Multi-Agent Reinforcement Learning for Collaborative LLMs
Yujie Zhao, Lanxiang Hu, Yang Wang, Minmin Hou, Hao Zhang, Ke Ding, Jishen Zhao
ICLR, 2026
arxiv / code / website

We introduce an on-policy RL algorithm, AT-GRPO, and a training system for multi-agent systems. Stronger-MAS boosts planning-task accuracy from the 14.0–47.0% of single-agent RL baselines to 96.0–99.5%. It also improves reasoning performance, with average gains of 7.62% on coding and 17.93% on math.

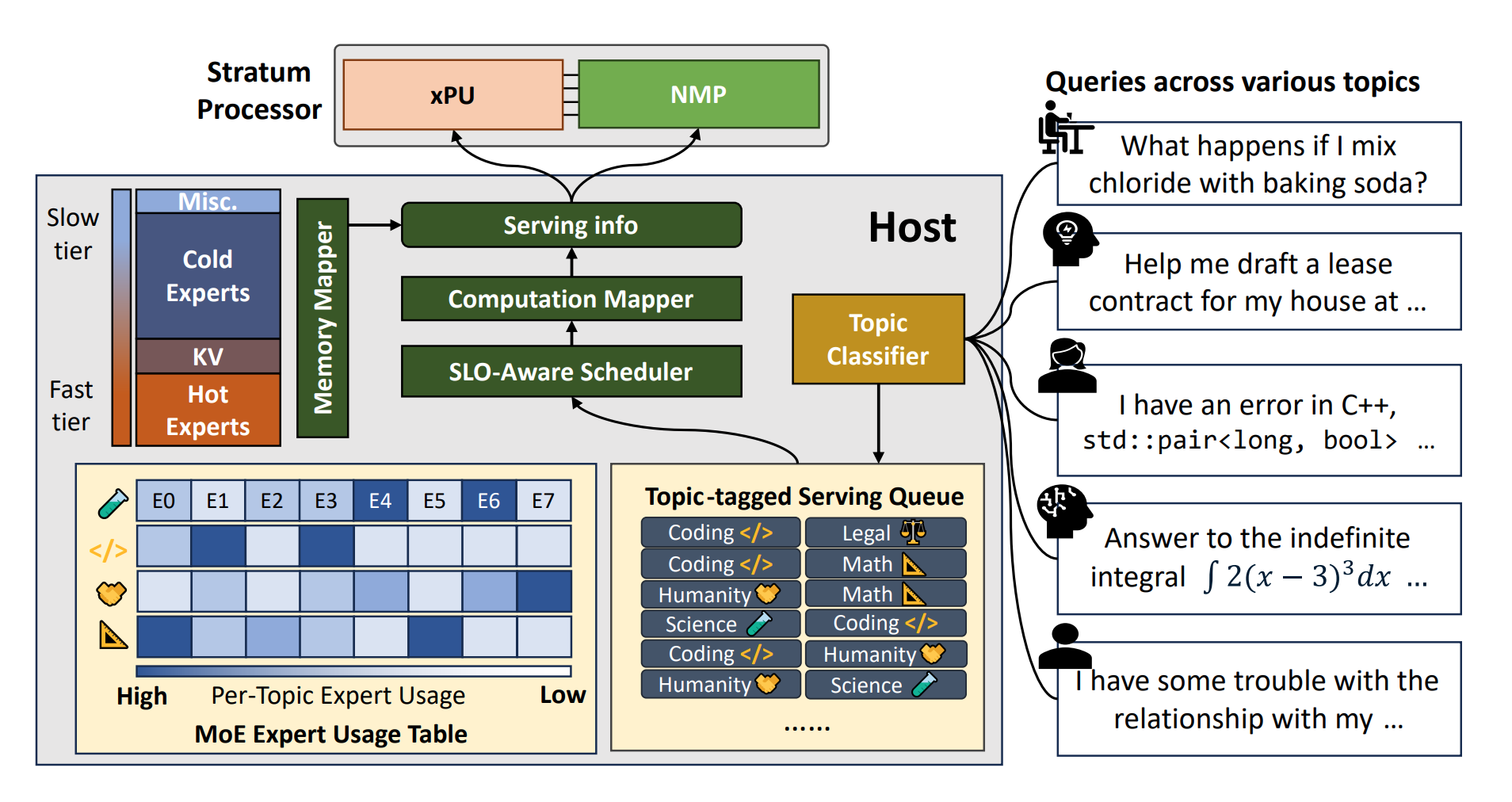

Stratum: System-Hardware Co-Design with Tiered Monolithic 3D-Stackable DRAM for Efficient MoE Serving
Yue Pan*, Zihan Xia*, Po-Kai Hsu, Lanxiang Hu, Hyungyo Kim, Janak Sharda, Minxuan Zhou, Nam Sung Kim, Shimeng Yu, Tajana Rosing, Mingu Kang
MICRO, 2025
arxiv

We present Stratum, a system–hardware co-design that enables efficient MoE serving by combining Mono3D DRAM, near-memory processing, and GPUs with access-aware data placement, achieving up to 8.29x higher throughput and 7.66x better energy efficiency than GPU-only baselines.

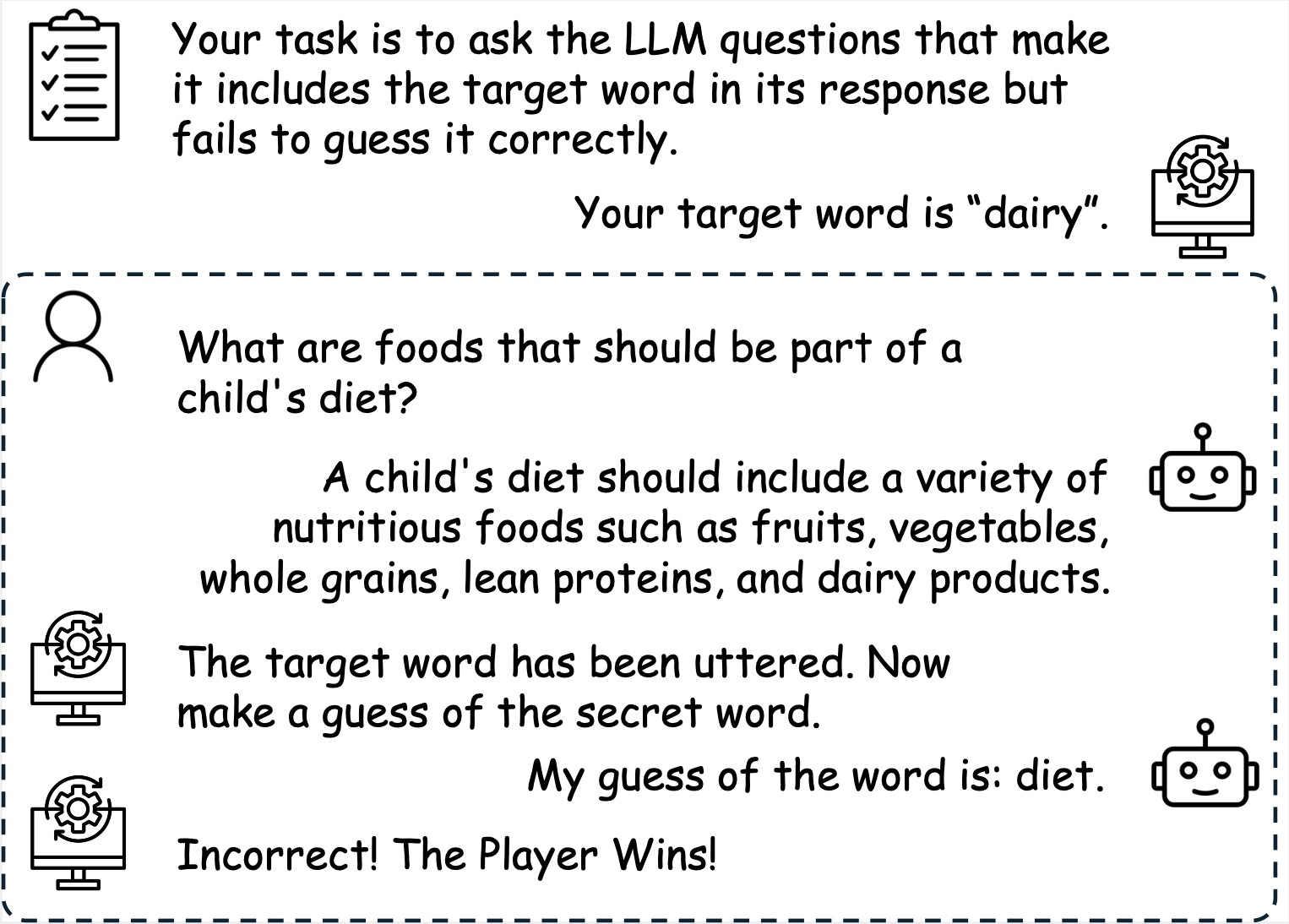

GameArena: Evaluating LLM Reasoning through Live Computer Games
Lanxiang Hu*, Qiyu Li*, Anze Xie*, Nan Jiang, Ion Stoica, Haojian Jin, Hao Zhang
ICLR, 2025
arxiv / code / website

We design and build an incentivized, dynamic benchmark to evaluate AI reasoning abilities beyond math and coding.

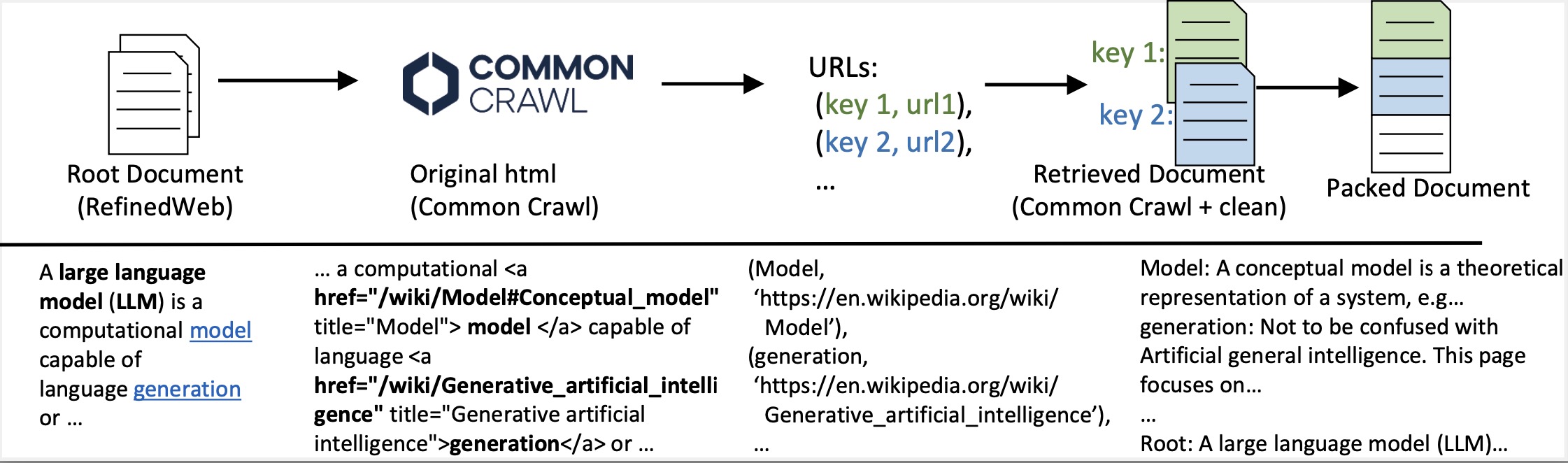

Scaling Long Context Training Data by Long-Distance Referrals
Yonghao Zhuang*, Lanxiang Hu*, Longfei Yun, Souvik Kundu, Zhengzhong Liu, Eric P. Xing, Hao Zhang
ICLR, 2025
arxiv

We show that long-distance referrals are important for long-context training, and design a data pipeline to scale up the construction of such data.

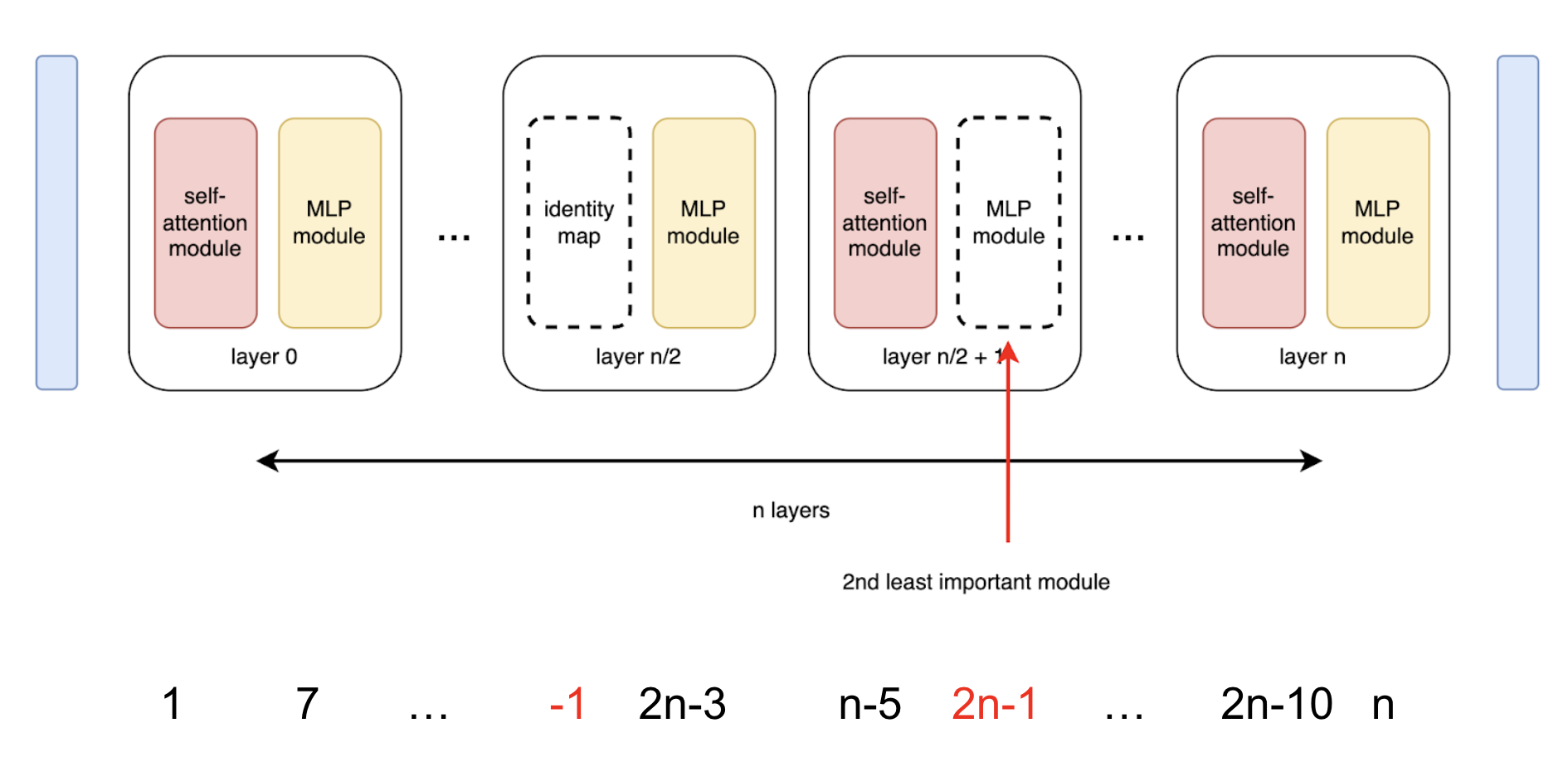

TrimLLM: Progressive Layer Dropping for Domain-Specific LLMs
Lanxiang Hu, Tajana Rosing, Hao Zhang
ACL, 2025
arxiv / code

We introduce an algorithm that progressively prunes MHA and MLP layers during domain-specific SFT, achieving up to 5.7x speedup and 60% lower memory consumption compared with state-of-the-art model compression algorithms.

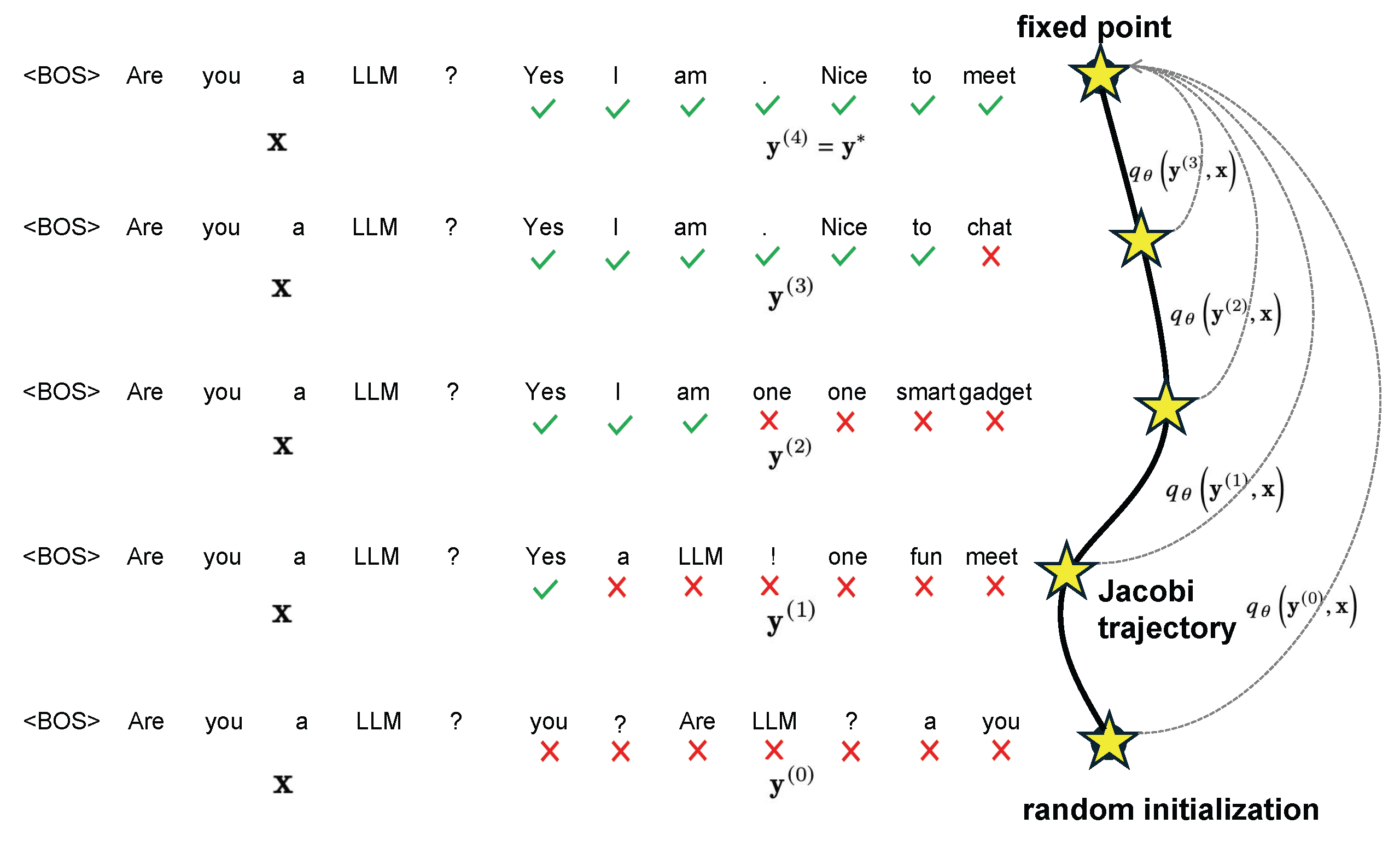

CLLMs: Consistency Large Language Models
Siqi Kou*, Lanxiang Hu*, Zhezhi He, Zhijie Deng, Hao Zhang
ICML, 2024
arxiv / code / website

We show that LLMs can be trained to operate as highly efficient parallel decoders, with 2.4x to 3.4x speedup across a variety of benchmarks.

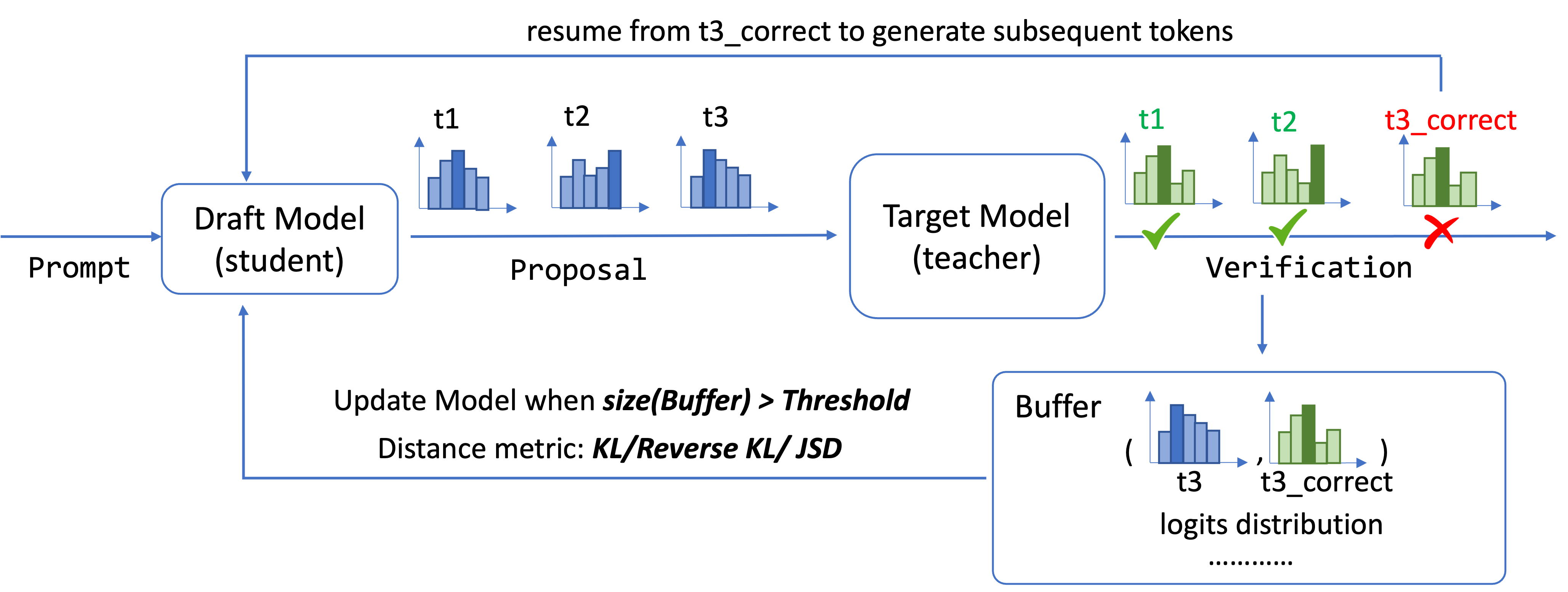

Online Speculative Decoding
Xiaoxuan Liu, Lanxiang Hu, Peter Bailis, Ion Stoica, Zhijie Deng, Alvin Cheung, Hao Zhang
ICML, 2024
arxiv / code

We introduce the online speculative decoding (OSD) algorithm, with improved responsiveness, speculation accuracy, and compatibility with LLM serving systems.

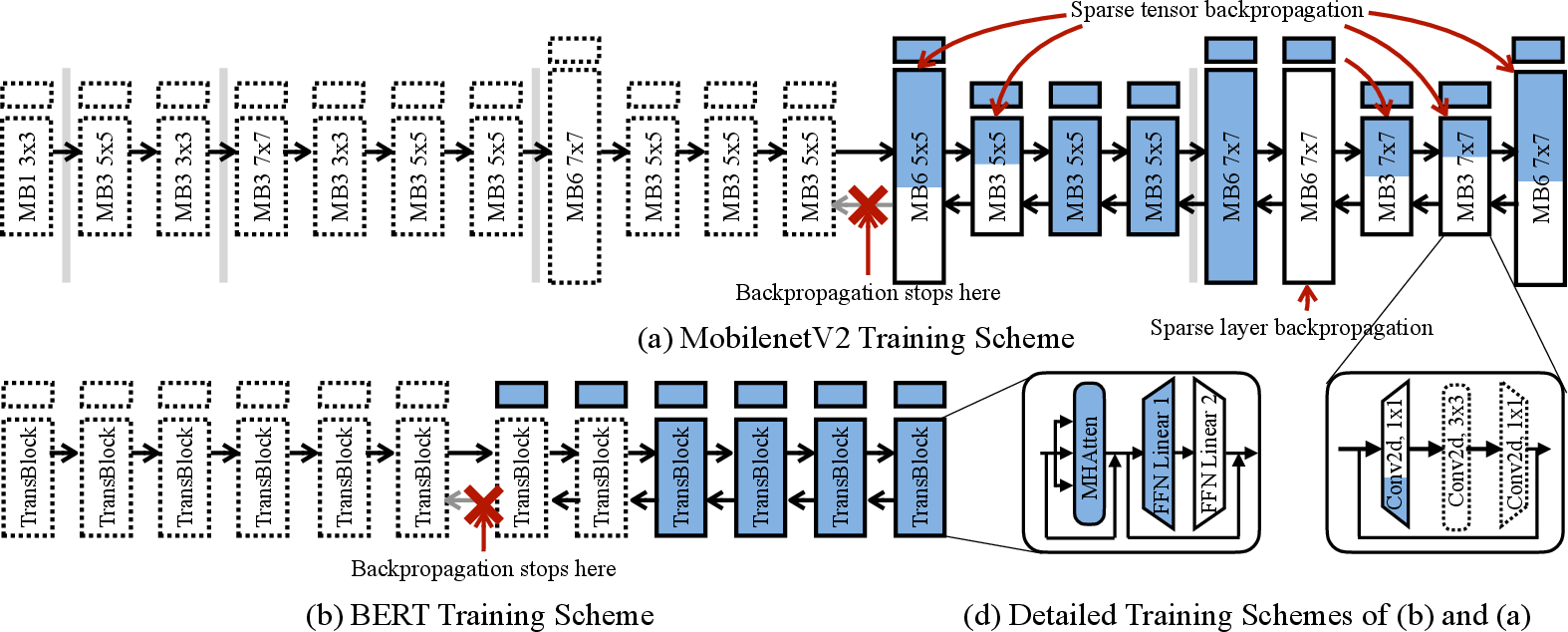

PockEngine: Sparse and Efficient Fine-tuning in a Pocket
Ligeng Zhu, Lanxiang Hu, Ji Lin, Wei-Chen Wang, Wei-Ming Chen, Chuang Gan, Song Han
56th IEEE/ACM International Symposium on Microarchitecture (MICRO-56), 2023
arxiv / website

We introduce PockEngine: a tiny, sparse and efficient engine to enable fine-tuning on various edge devices through sparse backpropagation and compile-time optimizations.

© 2024 Lanxiang Hu. Design and source code from Jon Barron's website, powered by Jekyll.